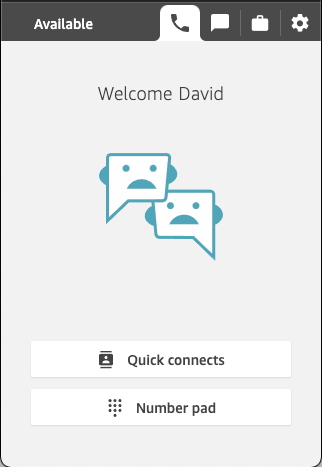

It had been years, maybe 10, since I had done dialer campaigns on Cisco Contact Center Express (UCCX). I recently got a chance to do a deep dive into an SFDC integration I built, and I wanted to capture how to track a call via the UCCX logs. What we will be covering is a UCCX outbound campaign that is agent based and preview. You load a new call list with a CSV or through the API. Then you log the agent in to Finesse and a preview call is presented to the agent. If the agent accepts the preview, the customer is dialed. After accepting, the agent can end the call or schedule a callback. The steps below walk you through tracing that call in the logs.

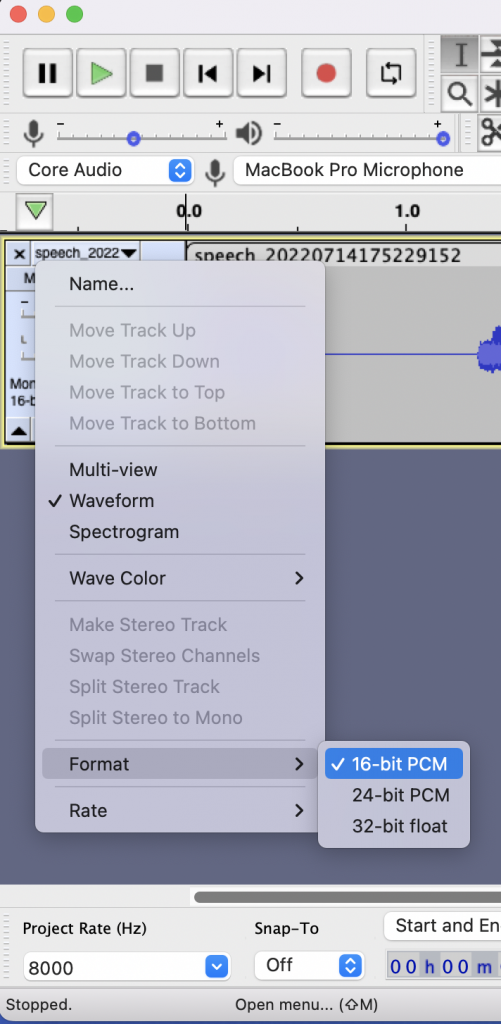

Trace levels you should have set in UCCX Serviceability:

SS_OB: Debugging, XDebugging1, XDebugging2

SS_RM: Debugging, XDebugging1

SS_RMCM: Debugging

You’re going to need RTMT and Notepad++.

- Start by getting the ANI of the record you were going to dial and downloading the MIVR and JTAPI logs.

- Using RTMT, download the Cisco Unified CCX Engine and Cisco Unified CCX JTAPI Client logs from your server for the time frame needed.

- Go to the downloaded MIVR log directory, unzip all the files, and leave them in place.

- Open Notepad++ Find in Files, choose the location C:\YourServer\YourTimeStamp\uccx\log and search for the ANI sent to the dialer.

Notepad++ Search Settings
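If you would rather script the search than click through Notepad++, here is a rough sketch of the same idea: walk the unzipped log directory and collect every line that contains the ANI, grouped by file. The function name `find_ani` is made up for this example, and it assumes the logs are already unzipped plain text.

```python
from pathlib import Path


def find_ani(log_root, ani):
    """Return {file path: [(line number, line text), ...]} for lines containing the ANI."""
    hits = {}
    for path in sorted(Path(log_root).rglob("*")):
        if not path.is_file():
            continue
        # Logs can contain stray bytes, so replace them instead of failing.
        with path.open("r", encoding="utf-8", errors="replace") as handle:
            for number, line in enumerate(handle, start=1):
                if ani in line:
                    hits.setdefault(str(path), []).append((number, line.rstrip("\n")))
    return hits
```

You would call it with the same folder you point Notepad++ at, for example `find_ani(r"C:\YourServer\YourTimeStamp\uccx\log", "912145551234")`. Files that are still zipped will not produce readable hits, which is why the unzip step matters.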

- Start with any hits in your JTAPI folder. These tell you which calls were placed to your ANI and let you correlate them to an agent extension. The example below shows 3 total calls made to that ANI by an agent, spread across 3 different JTAPI logs.

CiscoJtapi041.log

17467378: Sep 07 13:53:41.861 CST %JTAPI-JTAPI-7-UNK:(P2-ccxrmcm)[pool-26-thread-1193][(P2-ccxrmcm) GCID=(1,15759614)->IDLE]Request: connect (CSFdavidmacias, 155551, 912145551234featurePriority=1)

17467379: Sep 07 13:53:41.861 CST %JTAPI-PROTOCOL-7-UNK:(P2-1.1.1.30) [pool-26-thread-1193] sending: com.cisco.cti.protocol.LineCallInitiateRequest {sequenceNumber = 41195lineCallManagerID = 4lineID = 35553globalCallManagerID = 1globalCallID = 15759614callingAddress = 155551destAddress = 912145551234userData = nullmediaDeviceName = mediaResourceId = 0featurePriority = 1}

CiscoJtapi043.log

17485516: Sep 07 14:00:54.648 CST %JTAPI-JTAPI-7-UNK:(P2-ccxrmcm)[pool-26-thread-1196][(P2-ccxrmcm) GCID=(1,15759713)->IDLE]Request: connect (CSFdavidmacias, 155551, 912145551234featurePriority=1)

17485517: Sep 07 14:00:54.648 CST %JTAPI-PROTOCOL-7-UNK:(P2-1.1.1.30) [pool-26-thread-1196] sending: com.cisco.cti.protocol.LineCallInitiateRequest {sequenceNumber = 41214lineCallManagerID = 4lineID = 35553globalCallManagerID = 1globalCallID = 15759713callingAddress = 155551destAddress = 912145551234userData = nullmediaDeviceName = mediaResourceId = 0featurePriority = 1}

CiscoJtapi046.log

17516022: Sep 07 14:15:45.225 CST %JTAPI-JTAPI-7-UNK:(P2-ccxrmcm)[pool-26-thread-1200][(P2-ccxrmcm) GCID=(1,15759923)->IDLE]Request: connect (CSFdavidmacias, 155551, 912145551234featurePriority=1)

17516023: Sep 07 14:15:45.225 CST %JTAPI-PROTOCOL-7-UNK:(P2-1.1.1.30) [pool-26-thread-1200] sending: com.cisco.cti.protocol.LineCallInitiateRequest {sequenceNumber = 41258lineCallManagerID = 4lineID = 35553globalCallManagerID = 1globalCallID = 15759923callingAddress = 155551destAddress = 912145551234userData = nullmediaDeviceName = mediaResourceId = 0featurePriority = 1}
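To pull the useful bits out of those JTAPI hits without reading each line by eye, a small regular expression can do the work. This is only a sketch built against the `Request: connect` lines shown above; the pattern and the function name `parse_connect_requests` are my own, and other UCCX versions may format the line differently.

```python
import re

# Matches the "Request: connect (device, calling extension, dialed number..." entries.
CONNECT = re.compile(
    r"(?P<sequence>\d+): (?P<timestamp>\w{3} \d{2} \d{2}:\d{2}:\d{2}\.\d{3}) \w+ "
    r"%JTAPI-JTAPI-7-UNK:.*?GCID=\((?P<cm>\d+),(?P<gcid>\d+)\)[^\]]*\]"
    r"Request: connect \((?P<device>[^,]+), (?P<calling>\d+), (?P<dest>\d+)"
)


def parse_connect_requests(lines, ani):
    """Return one dict per connect request whose dialed number equals the ANI."""
    calls = []
    for line in lines:
        for match in CONNECT.finditer(line):
            if match.group("dest") == ani:
                calls.append(match.groupdict())
    return calls
```

Each result carries the timestamp, the global call ID, the agent device, and the agent extension, which is what you need to line the calls up with the engine logs later.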

- Next we need to see what the engine sees for that ANI. From the same search you did before, open the earliest MIVR log that shows the ANI in question. You need to find the record id for your specific call; this record id is how the outbound subsystem tracks the call. This means that while you start the search with your ANI, subsequent searching will be based on this record id. Below are two log entries that show the record id, 15541 in this case. They also show what was imported into UCCX, so you can validate that the data received by UCCX was the data you sent.

125439796: Sep 07 13:53:16.034 CST %MIVR-CFG_MGR-7-UNK: [MIVR_SS_OB_ReadContactsThread-750-0-ReadContactsThread] com.cisco.config.impl.ConfigManagerImpl ConfigManagerImpl-getAll():CIR[0]=ConfigImportRecord[schema=DialingListConfig#4,time=2024-03-05 13:53:13.0,recordId=15541,implClass=class com.cisco.crs.outbound.DialingListConfig,desc=,values=[15541, 30, 5008DM, Other.wav, Agent, 912145551234, , , 92, true, -1, true, -1, true, , 2024-03-05 19:53:13.0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, false, 0, null, null, null],evalues=null]

125439797: Sep 07 13:53:16.034 CST %MIVR-CFG_MGR-7-UNK: [MIVR_SS_OB_ReadContactsThread-750-0-ReadContactsThread] com.cisco.config.impl.ConfigManagerImpl ConfigManagerImpl-getAll():configObjs[0]=DialingListConfig[schema=DialingListConfig#4,time=2024-03-05 13:53:13.0,recordId=15541,desc=,recordID=0,dialingListID=15541,campaignID=30,accountNumber=5008DM,firstName=Other.wav,lastName=Agent,phone01=912145551234,phone02=,phone03=,gmtZonePhone01=92,dstPhone01=true,gmtZonePhone02=-1,dstPhone02=true,gmtZonePhone03=-1,dstPhone03=true,callbackNumber=,callbackDateTime=2024-03-05 19:53:13.0,callStatus=1,callResult=0,callResult01=0,callResult02=0,callResult03=0,lastNumberDialed=0,callsMadeToPhone01=0,callsMadeToPhone02=0,callsMadeToPhone03=0,numMissedCallback=0,retry=false,callsMadeToCallbackNum=0]
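The `DialingListConfig[...]` entry is just a comma-separated list of key=value pairs, so it is easy to turn into a dictionary and look up the record id by phone number. The sketch below assumes no field value contains a comma or a closing bracket, which holds for the entries shown here; the helper names are invented for this example.

```python
import re

# Captures everything between "DialingListConfig[" and the next "]".
RECORD = re.compile(r"DialingListConfig\[(?P<body>[^\]]*)\]")


def parse_dialing_list_config(line):
    """Return the key=value fields of a DialingListConfig[...] entry, or None if absent."""
    match = RECORD.search(line)
    if match is None:
        return None
    fields = {}
    for pair in match.group("body").split(","):
        key, separator, value = pair.partition("=")
        if separator:
            fields[key.strip()] = value
    return fields


def record_ids_for_ani(lines, ani):
    """Return the sorted recordId values of entries whose phone01..phone03 match the ANI."""
    ids = set()
    for line in lines:
        fields = parse_dialing_list_config(line)
        if fields and ani in (fields.get("phone01"), fields.get("phone02"), fields.get("phone03")):
            ids.add(fields["recordId"])
    return sorted(ids)
```

Printing the parsed dictionary next to the row you loaded from the CSV or API is a quick way to confirm UCCX received what you sent.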

- Next we want to find out who was offered this call. Using Notepad++ Find in Files, search the same location for the regular expression associateContactToReservedAgent.*dlc:15541 (substitute your own record id).

Notepad++ Search Settings Regular Expression

- This tells you who the call was sent to and at what time. In my case you can match these 3 offers to the 3 JTAPI calls above.

125443434: Sep 07 13:53:39.146 CST %MIVR-SS_OB-7-UNK: [MIVR_SS_OB_OutboundMgrMsgProcessor-192-0-OutboundMgrMsgProcessor] com.cisco.wf.subsystems.outbound.PreviewDialer PreviewDialer:associateContactToReservedAgent() rsrc:Rsrc Name:David Macias ID:dmacias IAQ Extn:155551 for contact:OutboundContactInfo: dlc:15541 (phoneNumber:912145551234 unformattedPhoneNumber:912145551234 timezone -360 callStartTime 0 answeringMachine false )

125505817: Sep 07 14:00:49.286 CST %MIVR-SS_OB-7-UNK: [MIVR_SS_OB_OutboundMgrMsgProcessor-192-0-OutboundMgrMsgProcessor] com.cisco.wf.subsystems.outbound.PreviewDialer PreviewDialer:associateContactToReservedAgent() rsrc:Rsrc Name:David Macias ID:dmacias IAQ Extn:155551 for contact:OutboundContactInfo: dlc:15541 (phoneNumber:912145551234 unformattedPhoneNumber:912145551234 timezone -360 callStartTime 0 answeringMachine false )

125632982: Sep 07 14:15:39.532 CST %MIVR-SS_OB-7-UNK: [MIVR_SS_OB_OutboundMgrMsgProcessor-192-0-OutboundMgrMsgProcessor] com.cisco.wf.subsystems.outbound.PreviewDialer PreviewDialer:associateContactToReservedAgent() rsrc:Rsrc Name:David Macias ID:dmacias IAQ Extn:155551 for contact:OutboundContactInfo: dlc:15541 (phoneNumber:912145551234 unformattedPhoneNumber:912145551234 timezone -360 callStartTime 0 answeringMachine false )
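The same search can be scripted. Here is a minimal sketch that extracts the offer time, agent, and extension from the `associateContactToReservedAgent()` lines for one record id. The pattern is written against the three lines above and the function name `offers_for_record` is hypothetical, so treat it as a starting point.

```python
import re

OFFER = re.compile(
    r"(?P<timestamp>\w{3} \d{2} \d{2}:\d{2}:\d{2}\.\d{3}) .*"
    r"associateContactToReservedAgent\(\) rsrc:Rsrc Name:(?P<name>.*?) "
    r"ID:(?P<agent_id>\S+) IAQ Extn:(?P<extension>\d+) "
    r"for contact:OutboundContactInfo: dlc:(?P<record_id>\d+)\b"
)


def offers_for_record(lines, record_id):
    """Return (timestamp, agent id, extension) for each offer of the given record id."""
    offers = []
    for line in lines:
        match = OFFER.search(line)
        if match and match.group("record_id") == str(record_id):
            offers.append((match.group("timestamp"), match.group("agent_id"), match.group("extension")))
    return offers
```

Comparing these timestamps and the extension with the JTAPI connect requests is how the offers and the dialed calls pair up.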

As you saw in the JTAPI logs and above, the same record was called multiple times. One likely reason is that an agent scheduled a callback, but how do we confirm this? This part is not as clean as I would like, but the following process should give you a decent idea if a callback was rescheduled or not.

#This is the original record being set. Notice the callbackDateTime.

125445090: Sep 07 13:53:43.726 CST %MIVR-CFG_MGR-7-UNK: [MIVR_SS_OB_SaveContactsMsgProcessor-198-0-SaveContactsMsgProcessor] com.cisco.config.impl.ConfigStubImpl configStubImpl-replace() exporting object: DialingListConfig[schema=DialingListConfig#4,time=2024-03-05 13:53:13.0,recordId=15541,desc=,recordID=0,dialingListID=15541,campaignID=30,accountNumber=5008DM,firstName=Other.wav,lastName=Agent,phone01=912145551234,phone02=,phone03=,gmtZonePhone01=92,dstPhone01=true,gmtZonePhone02=-1,dstPhone02=true,gmtZonePhone03=-1,dstPhone03=true,callbackNumber=,callbackDateTime=2024-03-05 19:53:13.0,callStatus=3,callResult=1,callResult01=1,callResult02=0,callResult03=0,lastNumberDialed=1,callsMadeToPhone01=1,callsMadeToPhone02=0,callsMadeToPhone03=0,numMissedCallback=0,retry=false,callsMadeToCallbackNum=0]

#Notice that the callbackDateTime is now set to 20:15:33. One thing to note: although the timestamp is labeled with the server’s time zone (CST), the value is actually UTC, so the callback will happen at 20:15:33 UTC.

125451126: Sep 07 13:54:33.558 CST %MIVR-CFG_MGR-7-UNK: [MIVR_SS_OB_SaveContactsMsgProcessor-198-0-SaveContactsMsgProcessor] com.cisco.config.impl.ConfigStubImpl configStubImpl-replace() exporting object: DialingListConfig[schema=DialingListConfig#4,time=2024-03-05 13:53:13.0,recordId=15541,desc=,recordID=0,dialingListID=15541,campaignID=30,accountNumber=5008DM,firstName=Other.wav,lastName=Agent,phone01=912145551234,phone02=,phone03=,gmtZonePhone01=92,dstPhone01=true,gmtZonePhone02=-1,dstPhone02=true,gmtZonePhone03=-1,dstPhone03=true,callbackNumber=,callbackDateTime=Tue Mar 05 20:15:33 CST 2024,callStatus=4,callResult=8,callResult01=8,callResult02=0,callResult03=0,lastNumberDialed=1,callsMadeToPhone01=1,callsMadeToPhone02=0,callsMadeToPhone03=0,numMissedCallback=0,retry=false,callsMadeToCallbackNum=0]

#Repeat of the above change, now with callbackNumber populated.

125451194: Sep 07 13:54:33.853 CST %MIVR-CFG_MGR-7-UNK: [MIVR_SS_OB_SaveContactsMsgProcessor-198-0-SaveContactsMsgProcessor] com.cisco.config.impl.ConfigStubImpl configStubImpl-replace() exporting object: DialingListConfig[schema=DialingListConfig#4,time=2024-03-05 13:53:13.0,recordId=15541,desc=,recordID=0,dialingListID=15541,campaignID=30,accountNumber=5008DM,firstName=Other.wav,lastName=Agent,phone01=912145551234,phone02=,phone03=,gmtZonePhone01=92,dstPhone01=true,gmtZonePhone02=-1,dstPhone02=true,gmtZonePhone03=-1,dstPhone03=true,callbackNumber=912145551234,callbackDateTime=Tue Mar 05 20:15:33 CST 2024,callStatus=4,callResult=8,callResult01=8,callResult02=0,callResult03=0,lastNumberDialed=1,callsMadeToPhone01=1,callsMadeToPhone02=0,callsMadeToPhone03=0,numMissedCallback=0,retry=false,callsMadeToCallbackNum=0]

#The record is saved again after the second call: callStatus is back to 3, callResult is 1, and callsMadeToCallbackNum is 1.

125508618: Sep 07 14:01:06.354 CST %MIVR-CFG_MGR-7-UNK: [MIVR_SS_OB_SaveContactsMsgProcessor-198-0-SaveContactsMsgProcessor] com.cisco.config.impl.ConfigStubImpl configStubImpl-replace() exporting object: DialingListConfig[schema=DialingListConfig#4,time=2024-03-05 13:53:13.0,recordId=15541,desc=,recordID=0,dialingListID=15541,campaignID=30,accountNumber=5008DM,firstName=Other.wav,lastName=Agent,phone01=912145551234,phone02=,phone03=,gmtZonePhone01=92,dstPhone01=true,gmtZonePhone02=-1,dstPhone02=true,gmtZonePhone03=-1,dstPhone03=true,callbackNumber=912145551234,callbackDateTime=2024-03-05 20:15:33.0,callStatus=3,callResult=1,callResult01=8,callResult02=0,callResult03=0,lastNumberDialed=4,callsMadeToPhone01=1,callsMadeToPhone02=0,callsMadeToPhone03=0,numMissedCallback=0,retry=false,callsMadeToCallbackNum=1]

#New callbackDateTime set.

125514552: Sep 07 14:01:30.954 CST %MIVR-CFG_MGR-7-UNK: [MIVR_SS_OB_SaveContactsMsgProcessor-198-0-SaveContactsMsgProcessor] com.cisco.config.impl.ConfigStubImpl configStubImpl-replace() exporting object: DialingListConfig[schema=DialingListConfig#4,time=2024-03-05 13:53:13.0,recordId=15541,desc=,recordID=0,dialingListID=15541,campaignID=30,accountNumber=5008DM,firstName=Other.wav,lastName=Agent,phone01=912145551234,phone02=,phone03=,gmtZonePhone01=92,dstPhone01=true,gmtZonePhone02=-1,dstPhone02=true,gmtZonePhone03=-1,dstPhone03=true,callbackNumber=912145551234,callbackDateTime=Tue Mar 05 20:30:30 CST 2024,callStatus=4,callResult=8,callResult01=8,callResult02=0,callResult03=0,lastNumberDialed=4,callsMadeToPhone01=1,callsMadeToPhone02=0,callsMadeToPhone03=0,numMissedCallback=0,retry=false,callsMadeToCallbackNum=2]

#Repeat of the above change.

125514631: Sep 07 14:01:31.247 CST %MIVR-CFG_MGR-7-UNK: [MIVR_SS_OB_SaveContactsMsgProcessor-198-0-SaveContactsMsgProcessor] com.cisco.config.impl.ConfigStubImpl configStubImpl-replace() exporting object: DialingListConfig[schema=DialingListConfig#4,time=2024-03-05 13:53:13.0,recordId=15541,desc=,recordID=0,dialingListID=15541,campaignID=30,accountNumber=5008DM,firstName=Other.wav,lastName=Agent,phone01=912145551234,phone02=,phone03=,gmtZonePhone01=92,dstPhone01=true,gmtZonePhone02=-1,dstPhone02=true,gmtZonePhone03=-1,dstPhone03=true,callbackNumber=912145551234,callbackDateTime=Tue Mar 05 20:30:30 CST 2024,callStatus=4,callResult=8,callResult01=8,callResult02=0,callResult03=0,lastNumberDialed=4,callsMadeToPhone01=1,callsMadeToPhone02=0,callsMadeToPhone03=0,numMissedCallback=0,retry=false,callsMadeToCallbackNum=0]

#The record is saved again after the third call: callStatus is back to 3 and callResult is 1.

125635076: Sep 07 14:15:54.719 CST %MIVR-CFG_MGR-7-UNK: [MIVR_SS_OB_SaveContactsMsgProcessor-198-0-SaveContactsMsgProcessor] com.cisco.config.impl.ConfigStubImpl configStubImpl-replace() exporting object: DialingListConfig[schema=DialingListConfig#4,time=2024-03-05 13:53:13.0,recordId=15541,desc=,recordID=0,dialingListID=15541,campaignID=30,accountNumber=5008DM,firstName=Other.wav,lastName=Agent,phone01=912145551234,phone02=,phone03=,gmtZonePhone01=92,dstPhone01=true,gmtZonePhone02=-1,dstPhone02=true,gmtZonePhone03=-1,dstPhone03=true,callbackNumber=912145551234,callbackDateTime=2024-03-05 20:30:30.0,callStatus=3,callResult=1,callResult01=8,callResult02=0,callResult03=0,lastNumberDialed=4,callsMadeToPhone01=1,callsMadeToPhone02=0,callsMadeToPhone03=0,numMissedCallback=0,retry=false,callsMadeToCallbackNum=1]
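Because the callbackDateTime value is really UTC even when it is labeled CST, it helps to convert it before comparing it with the log timestamps. The sketch below parses both formats that appear in the entries above and shifts the result by an offset in minutes. Using -360 (the `timezone -360` value from the associateContactToReservedAgent lines) and reading it as minutes from UTC are assumptions on my part, and the function names are made up.

```python
import re
from datetime import datetime, timedelta, timezone

MONTHS = {name: number for number, name in enumerate(
    ["Jan", "Feb", "Mar", "Apr", "May", "Jun", "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"], start=1)}


def parse_callback_utc(value):
    """Parse either callbackDateTime format and treat the clock value as UTC."""
    if re.match(r"\d{4}-\d{2}-\d{2} ", value):
        # Format like "2024-03-05 20:15:33.0": drop the fractional part.
        naive = datetime.strptime(value.split(".")[0], "%Y-%m-%d %H:%M:%S")
    else:
        # Format like "Tue Mar 05 20:15:33 CST 2024": ignore the weekday and zone label.
        _, month, day, clock, _, year = value.split()
        hour, minute, second = (int(part) for part in clock.split(":"))
        naive = datetime(int(year), MONTHS[month], int(day), hour, minute, second)
    return naive.replace(tzinfo=timezone.utc)


def to_local(utc_time, offset_minutes):
    """Shift a UTC time to a fixed offset given in minutes."""
    return utc_time.astimezone(timezone(timedelta(minutes=offset_minutes)))
```

For example, `to_local(parse_callback_utc("Tue Mar 05 20:15:33 CST 2024"), -360)` gives 14:15:33 at UTC-6.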

- So how do you confirm that the record is complete and there will be no more callbacks? Let’s look at the log lines above and zero in on callStatus and callResult. Status (3:Closed, 4:Callback). Result (1:Voice, 8:Callback). You need to look at all the lines to get a picture of whether there will be another call. In our case the last log line found has a status of Closed and a result of Voice, with no newer records, signifying that this record is now closed and done. I wish it were clearer, but this is the best I could find.

125445090: …callStatus=3,callResult=1,callResult01=1…

125451126: …callStatus=4,callResult=8,callResult01=8…

125451194: …callStatus=4,callResult=8,callResult01=8…

125508618: …callStatus=3,callResult=1,callResult01=8…

125514552: …callStatus=4,callResult=8,callResult01=8…

125514631: …callStatus=4,callResult=8,callResult01=8…

125635076: …callStatus=3,callResult=1,callResult01=8…

You can find all values here.
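To read that timeline at a glance, you can decode callStatus and callResult for each save and check the last one. This sketch only knows the four values mentioned in this post (3, 4, 1, and 8); anything else is reported as unknown. The "finished" check is my own heuristic, not something UCCX exposes.

```python
import re

CALL_STATUS = {3: "Closed", 4: "Callback"}
CALL_RESULT = {1: "Voice", 8: "Callback"}

# "callResult=" followed by digits and a comma, so callResult01..03 are not matched.
FIELDS = re.compile(r"callStatus=(\d+),callResult=(\d+),")


def decode_timeline(lines):
    """Return (status, result) labels for every line that carries both fields, in order."""
    timeline = []
    for line in lines:
        match = FIELDS.search(line)
        if match:
            status, result = int(match.group(1)), int(match.group(2))
            timeline.append((CALL_STATUS.get(status, f"unknown({status})"),
                             CALL_RESULT.get(result, f"unknown({result})")))
    return timeline


def looks_finished(timeline):
    """Heuristic: the last save is Closed with a Voice result."""
    return bool(timeline) and timeline[-1] == ("Closed", "Voice")
```

Feed it the configStubImpl-replace() lines for your record id in log order. If the last entry is a Callback status, another attempt is probably still pending.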

~david
